The Testkube Agent

Overview

The Testkube Agent is 100% Open Source and includes the Testkube execution and orchestration engine (with some limitations). It is always hosted in your infrastructure and can be deployed in two modes:

- Standalone Mode - not connected to a Testkube Control Plane.

- Connected Mode - connected to a Testkube Control Plane.

This document shows how to use the Agent in Standalone Mode. To learn how to use the Agent in Connected Mode, see the corresponding documentation for On Prem and Cloud deployment of the Testkube Control Plane.

See the Feature Comparison to understand the differences in functionality between these two modes.

Running in Standalone Mode

When running the Agent in Standalone Mode there is no Dashboard and it has to be managed entirely through the Testkube CLI.

The following functionality is available directly in the Agent in Standalone Mode:

- Test Workflows : Manage Workflows and Templates, Run/Schedule executions (see below for limitations).

- Logs/Artifacts : Retrieve Workflow executions, logs, artifacts via CLI or API.

- Webhooks : Manage Webhooks that the Agent executes.

- Event Triggers : Manage Event Triggers that the Agent reacts to.

- Tests, TestSuites, Sources, Executors : Deprecated - but still available during a transition period - Read More.

Agent Limitations in Standalone Mode

The following Workflow features are not available when using the Open Source Agent without connecting it to a Testkube Control Plane:

- Complex Test Orchestration with execute - see Test Suites

- Parallel execution with parallel - see Parallelization

- Parameterization with matrix (and count, shards, maxCount) - see Sharding & Matrix Params

- Spawning dependencies for your tests with services - see Services

- Concurrency Policies with concurrency - see Concurrency

Deploying the Testkube Agent in Standalone Mode provides extensive test execution capabilities even without these features. Check out the Open Source Overview to get started.
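As a rough illustration of what remains available, the following is a minimal sketch of a Test Workflow that uses none of the keywords listed above (execute, parallel, matrix, services, concurrency). The workflow name, namespace, container image, and step content are hypothetical and only serve as an example.

```yaml
# Minimal sketch of a Workflow that should be runnable by a standalone Agent.
# The name, image, and step below are illustrative assumptions.
apiVersion: testworkflows.testkube.io/v1
kind: TestWorkflow
metadata:
  name: standalone-smoke        # hypothetical workflow name
  namespace: testkube           # assumes the default installation namespace
spec:
  container:
    image: alpine:3.20          # hypothetical image; any image with a shell works
  steps:
    - name: Print a greeting
      shell: echo "Hello from the standalone agent"
```

Assuming the manifest is saved to a file, it could be applied with kubectl apply -f and then started with testkube run testworkflow standalone-smoke.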

Installing the Standalone Agent

The following steps are required to install the Standalone Agent into a Kubernetes Cluster:

- Create a Testkube namespace.

- Deploy the Testkube API (see below).

- Use MongoDB or PostgreSQL for test results and Minio for artifact storage (optional; disable with --no-minio).

- Testkube will listen and manage all the CRDs for TestWorkflows, Triggers, Webhooks, etc… inside the Testkube namespace.

Once installed, you can verify your installation and check that Testkube is up and running with kubectl get all -n testkube. Once validated, you're ready to unleash the full potential of Testkube in your environment.

Testkube OSS is here to help you power your development and testing workflows seamlessly.

With the CLI

You can install the standalone agent by executing the following command. By default it will install within the testkube namespace for your current Kubernetes context.

testkube init

With Helm

helm upgrade --install \
  --create-namespace \
  --namespace testkube \
  testkube oci://us-east1-docker.pkg.dev/testkube-cloud-372110/testkube/testkube --version <version>

By default, the namespace for the installation will be testkube. If the testkube namespace does not exist, it will be created for you.

Alternatively, you can customize the default values.yaml by first fetching the Helm chart, unpacking it, modifying the values.yaml, and then installing it from the current directory:

helm pull oci://us-east1-docker.pkg.dev/testkube-cloud-372110/testkube/testkube --version <version>

tar -xzf testkube-<version>.tgz

cd testkube/

helm install testkube . --create-namespace --namespace testkube --values values.yaml

The Helm Chart Docs include a list of all available values properties.

Upgrading

See upgrade for instructions on how to upgrade the standalone agent.

Uninstalling

With the CLI

testkube purge

With Helm

helm delete --namespace testkube testkube

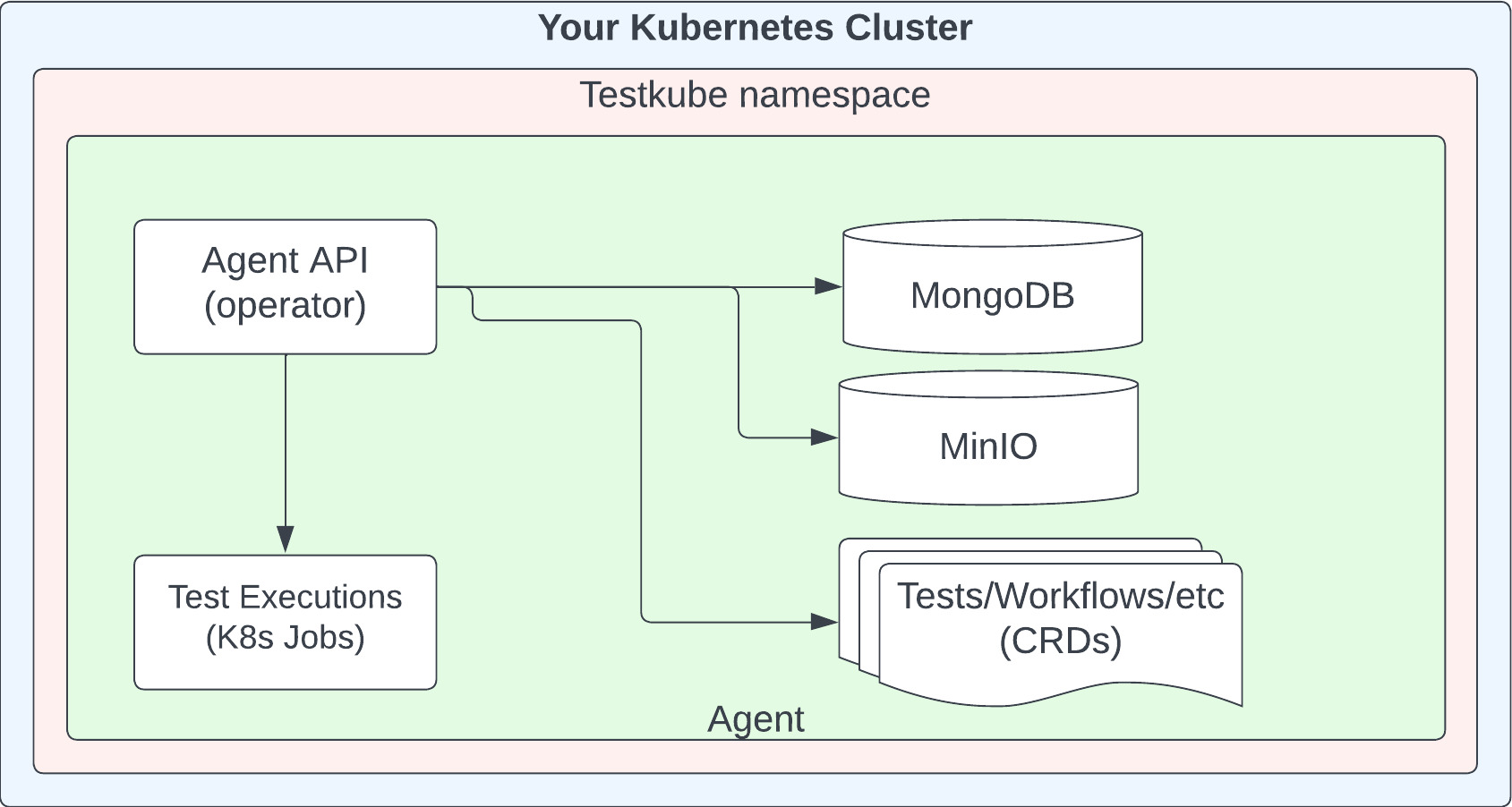

Deployment Architecture

A high-level deployment architecture for Standalone Agent is shown below.

The Testkube CRDs managed by the Operator are described in Testkube Custom Resources.

Connecting to the Testkube Control Plane

You can connect a standalone Agent to an instance of the Testkube Control Plane to leverage corresponding functionality (see Feature Comparison). All Workflow/Trigger/Webhook definitions will be preserved, but historical test execution results and artifacts won't be copied to the control plane.

The following command will guide you through the migration process:

testkube pro connect

To complete the procedure, you will finally have to set your CLI Context to talk to Testkube.

Advanced

Log Storage

Testkube can be configured to use different storage for test log output. The storage is specified in the Helm values.

## Logs storage for Testkube API.
logs:
  ## where the logs should be stored; there are 2 possible values: minio|mongo
  storage: "minio"
  ## if storage is set to minio then the bucket must be specified; if minio with s3 is used, make sure to use a unique name
  bucket: "testkube-logs"

When mongo is specified, logs will be stored in a separate collection so the execution handling performance is not affected.

When minio is specified, logs will be stored as separate files in the configured bucket of the MinIO instance or the S3 bucket if MinIO is configured to work with S3.
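For comparison, a minimal sketch of the mongo variant is shown here. It assumes that the logs block is nested under the testkube-api key of the chart values; check the Helm Chart Docs for the exact location in your chart version.

```yaml
# Sketch only: switches log storage from MinIO to MongoDB.
# Assumption: the logs block lives under the testkube-api values key.
testkube-api:
  logs:
    # logs are written to a separate MongoDB collection;
    # the bucket setting is only described as required for minio
    storage: "mongo"
```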

Artifact Storage

Testkube allows you to save supported files generated by your tests, which we call Artifacts.

The engine collects all files stored in the location specified for each workflow and stores them in MinIO, in a bucket named after the execution ID.

The available configuration parameters in Helm charts are:

| Parameter | Is optional | Default | Description |
|---|---|---|---|
| testkube-api.storage.endpoint | yes | testkube-minio-service-testkube:9000 | URL of the S3 bucket |
| testkube-api.storage.accessKeyId | yes | minio | Access Key ID |
| testkube-api.storage.accessKey | yes | minio123 | Access Key |
| testkube-api.storage.location | yes | | Region |
| testkube-api.storage.token | yes | | S3 Token |
| testkube-api.storage.SSL | yes | false | Indicates whether SSL communication is to be enabled. |
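To show how the dotted parameter names map onto a values.yaml file, here is a minimal sketch that spells out the defaults from the table. The empty strings for location and token are placeholders, not documented defaults.

```yaml
# Sketch: the table's parameters written as nested Helm values.
# Values are the defaults listed above; empty strings are placeholders.
testkube-api:
  storage:
    endpoint: testkube-minio-service-testkube:9000
    accessKeyId: minio
    accessKey: minio123
    location: ""      # region; no default is listed in the table
    token: ""         # S3 token; no default is listed in the table
    SSL: false
```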

The API Server accepts the following environment variables:

STORAGE_ENDPOINT

STORAGE_BUCKET

STORAGE_ACCESSKEYID

STORAGE_SECRETACCESSKEY

STORAGE_LOCATION

STORAGE_REGION

STORAGE_TOKEN

STORAGE_SSL

Which can be set while installing with Helm:

helm install testkube oci://us-east1-docker.pkg.dev/testkube-cloud-372110/testkube/testkube --version <version> --set STORAGE_ENDPOINT=custom_value --create-namespace --namespace testkube

Alternatively, these values can be read from Kubernetes secrets and set:

- env:
  - name: STORAGE_ENDPOINT
    secret:
      secretName: test-secret
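The fragment above refers to a Secret named test-secret. A minimal sketch of such a Secret could look like the following. The namespace, the key name, and the value are assumptions for illustration, since the fragment does not state which key of the Secret is read.

```yaml
# Sketch of the Secret referenced above.
# Assumptions: it lives in the testkube namespace and stores the endpoint
# under a key named STORAGE_ENDPOINT; adjust to what your chart expects.
apiVersion: v1
kind: Secret
metadata:
  name: test-secret
  namespace: testkube
type: Opaque
stringData:
  STORAGE_ENDPOINT: custom_value   # placeholder value
```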

Deploying on OpenShift

To install the Testkube standalone agent on an OpenShift cluster you will need to include the following configuration:

- Add security context for MongoDB or PostgreSQL to values.yaml:

mongodb:
  securityContext:
    enabled: true
    fsGroup: 1000650001
    runAsUser: 1000650001
  podSecurityContext:
    enabled: false
  containerSecurityContext:
    enabled: true
    runAsUser: 1000650001
    runAsNonRoot: true
  volumePermissions:
    enabled: false
  auth:
    enabled: false

- Add security context for Patch and Migrate jobs that are a part of Testkube Operator configuration to values.yaml:

testkube-operator:
  webhook:
    migrate:
      enabled: true
      securityContext:
        allowPrivilegeEscalation: false
        capabilities:
          drop: ["ALL"]
    patch:
      enabled: true
      securityContext:
        runAsNonRoot: true
        runAsUser: 1000650000
        fsGroup: 1000650000

- Install Testkube specifying the path to the new values.yaml file:

helm install testkube oci://us-east1-docker.pkg.dev/testkube-cloud-372110/testkube/testkube --version <version> --create-namespace --namespace testkube --values values.yaml

Please note that since we've just installed MongoDB with a testkube-mongodb Helm release name, you are not required to reconfigure the Testkube API MongoDB connection URI. If you've installed with a different name/namespace, please adjust --set testkube-api.mongodb.dsn="mongodb://testkube-mongodb:27017" to your MongoDB service.

Using PostgreSQL as a database

You can run the Testkube Standalone Agent with PostgreSQL instead of MongoDB. This is currently an experimental feature, and deprecated functionalities are not supported. To enable PostgreSQL, update the values.yaml file in your Helm chart: enable the PostgreSQL settings and disable the MongoDB options.

mongodb:
  enabled: false

postgresql:
  enabled: true

testkube-api:
  mongodb:
    enabled: false
  postgresql:
    enabled: true
    dsn: <postgresql dsn (postgres://...)>

Deploying on AWS

If you are using Amazon Web Services, this tutorial will show you how to deploy Testkube OSS in EKS and expose its API to the Internet with the AWS Load Balancer Controller.

Prerequisites

First, we will need an existing Kubernetes cluster. Please see the official documentation on how to get started with an Amazon EKS cluster here.

Once the cluster is up and running we need to deploy the AWS Load Balancer Controller. For more information, see Installing the AWS Load Balancer Controller add-on.

Another important point is ExternalDNS. It is not compulsory to deploy it into your cluster, but it helps you dynamically manage your DNS records via k8s resources.

And last, but not least - install the Testkube CLI. You can download a binary file from our installation page. For how to deploy Testkube to your cluster with all the necessary changes, please see the next section.

Please note that it is necessary to install the EBS CSI driver to mount PVs in your k8s cluster.

Ingress and Service Resources Configuration

To deploy and expose Testkube API to the outside world, you will need to create an Ingress resource for Testkube's API server. In this tutorial, we will be updating values.yaml that later will be passed to the helm install command.

In order to use the AWS Load Balancer Controller we need to create a values.yaml file and add the following annotation to the Ingress resources:

annotations:
  kubernetes.io/ingress.class: alb

Once this annotation is added, the Controller creates an ALB and the necessary supporting AWS resources.

The example configuration using HTTPS protocol might look like the following:

Testkube API Ingress:

uiIngress:
  enabled: true
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/load-balancer-name: testkube-api
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/backend-protocol: HTTP
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80},{"HTTPS": 443}]'
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/healthcheck-path: "/health"
    alb.ingress.kubernetes.io/healthcheck-port: "8088"
    alb.ingress.kubernetes.io/ssl-redirect: "443"
    alb.ingress.kubernetes.io/certificate-arn: "arn:aws:acm:us-east-1:*******:certificate/*****"
  path: /v1
  hosts:
    - test-api.aws.testkube.io

Once we are ready with the values.yaml file, we can deploy Testkube into our cluster:

helm install testkube oci://us-east1-docker.pkg.dev/testkube-cloud-372110/testkube/testkube --version <version> --values values.yaml --namespace testkube --create-namespace

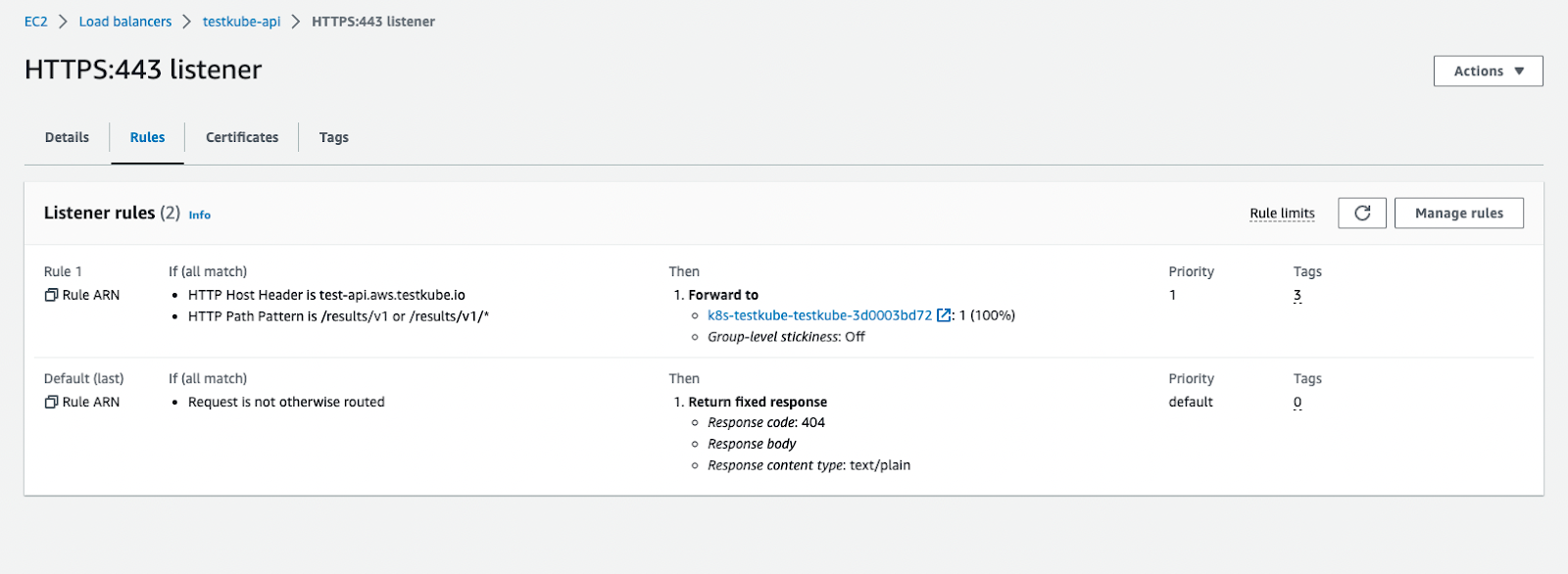

After the installation command is complete, you will see the following resources created into your AWS Console.

Please note that the annotations may vary, depending on your Load Balancer schema type, backend-protocols, target-type, etc. However, this is the bare minimum that should be applied to your configuration.

In addition to the Ingress annotations, you need to update the Service manifests with a healthcheck configuration as well. Include the lines below in your values.yaml file.

Testkube API Service:

service:
  type: ClusterIP
  port: 8088
  annotations:
    alb.ingress.kubernetes.io/healthcheck-path: "/health"
    alb.ingress.kubernetes.io/healthcheck-port: "8088"

Examples of AWS S3 Bucket configuration

If you plan to use an AWS S3 Bucket for storing test artifacts, you can follow the examples below.

Terraform AWS IAM policy:

data "aws_iam_policy_document" "testkube" {
  statement {
    sid    = "S3Buckets"
    effect = "Allow"
    actions = [
      "s3:ListAllMyBuckets", # see https://github.com/kubeshop/testkube/issues/3965
    ]
    resources = [
      "arn:aws:s3:::*",
    ]
  }
  statement {
    sid    = "S3Bucket"
    effect = "Allow"
    actions = [
      "s3:ListBucket",
      "s3:GetBucketLocation",
    ]
    resources = [
      "arn:aws:s3:::*-testkube-${terraform.workspace}",
    ]
  }
  statement {
    sid    = "S3Object"
    effect = "Allow"
    actions = [
      "s3:GetObject*",
      "s3:PutObject*",
      "s3:DeleteObject",
    ]
    resources = [
      "arn:aws:s3:::*-testkube-${terraform.workspace}/*",
    ]
  }
}

Testkube Helm values:

testkube-api:
  jobServiceAccountName: testkube-api-server # reuse the service-account from testkube-api
  minio:
    enabled: true # required to be able to access AWS S3 (minio is used as a proxy)
    minioRootUser: ""
    minioRootPassword: ""
    serviceAccountName: testkube-api-server # reuse the service-account from testkube-api
  serviceAccount:
    annotations:
      eks.amazonaws.com/role-arn: arn:aws:iam::111111111111:role/my-dev-testkube
  storage:
    endpoint: s3.amazonaws.com
    accessKeyId: ""
    accessKey: ""
    location: eu-central-1
    bucket: my-testkube-dev
    skipVerify: true
    endpoint_port: ""
  logs:
    storage: "minio"
    bucket: my-testkube-dev